A Framework for Auditing Multilevel Models using Explainability Methods

Multilevel models (MLMs) are commonly used for binary classification on hierarchically structured data, and such applications need to be transparent and ethical. This paper proposes an audit framework for assessing the technical aspects of regression MLMs, focusing on three areas: model, discrimination, and transparency/explainability. Contributors such as inter-MLM group fairness and feature contribution order are identified, and KPIs are proposed for evaluating them with a traffic light risk assessment method. Two explainability methods, SHAP and LIME, are employed and compared for the transparency assessment. Model performance is evaluated on an open-source dataset, and the evaluation highlights challenges with popular explainability methods. The framework aims to aid regulatory conformity assessments and to support businesses in aligning with AI regulations.

Key Points:

- Introduce an audit framework for technical assessment of regression MLMs

- Identify contributors and propose KPIs for model evaluation

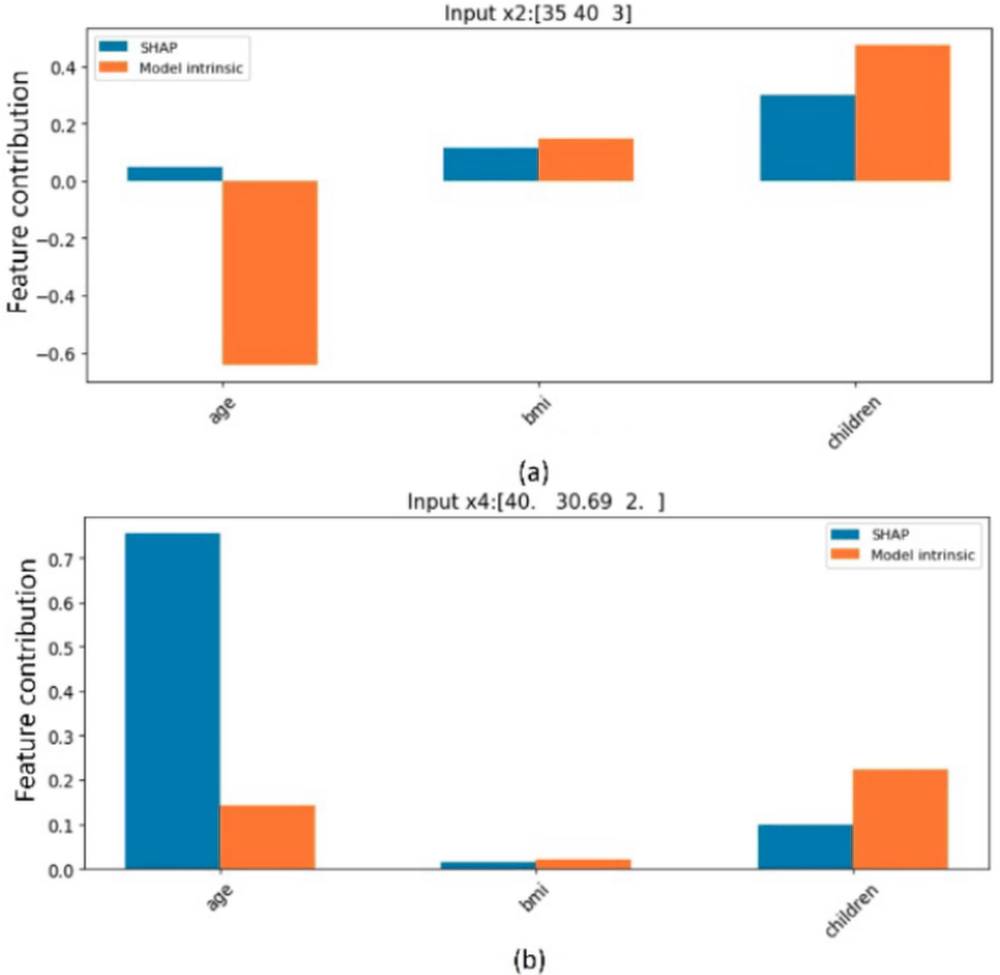

- The most commonly used explainability methods, such as SHAP and LIME, occasionally provide incorrect feature contribution magnitudes
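To make the traffic light idea concrete, the following is a minimal sketch of how a single KPI could be mapped to a risk level. It is an illustration under stated assumptions, not the framework's actual definition: the function names, the choice of a positive-rate gap between two groups as the fairness KPI, and the thresholds 0.1 and 0.2 are all hypothetical and do not come from the paper.

```python
from typing import Sequence


def positive_rate(predictions: Sequence[int]) -> float:
    """Share of positive (1) predictions in a group of binary predictions."""
    return sum(predictions) / len(predictions)


def fairness_gap(preds_a: Sequence[int], preds_b: Sequence[int]) -> float:
    """Hypothetical fairness KPI: absolute gap in positive rates between two groups."""
    return abs(positive_rate(preds_a) - positive_rate(preds_b))


def traffic_light(kpi_value: float, amber_from: float, red_from: float) -> str:
    """Map a KPI where lower is better to a traffic light risk level.

    Both thresholds are assumptions chosen by the auditor for illustration.
    """
    if kpi_value < amber_from:
        return "green"
    if kpi_value < red_from:
        return "amber"
    return "red"


# Example with made-up binary predictions for two groups.
group_a = [1, 1, 0, 0]
group_b = [1, 0, 0, 0]
gap = fairness_gap(group_a, group_b)
risk = traffic_light(gap, amber_from=0.1, red_from=0.2)
```

In an audit, each KPI would get its own thresholds, and the resulting colours could be collected per assessment area (model, discrimination, transparency/explainability) into a summary.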